I guess the odds are in my favor because, despite giving myself a 62-percent chance of continuing the series, here we are! (Read my first Then2Now article covering the history of computing to understand what I’m talking about there.) This is the second article in my Then2Now Series. In this article we’ll explore the history of networking and progress to where we sit today with the internet.

Let’s get one thing straight right up front. “The Internet” is a computer network. More specifically, the internet is a large number of independent computer networks connected together for the purpose of sharing data. So, when we say “The Internet,” we are simply speaking of a network on a very, very large scale.

No reason to drag this out, let’s get started with…

What Is A Network?

According to Oxford Languages, a network is a group or system of interconnected things. Since we are focused on computing devices in these articles, our networks consist of a group of interconnected computers; that is, computers that can communicate with one another to share data.

Is There Only One Kind of Network?

Oh heck no! There are dozens, perhaps hundreds, of different kinds of networks using different protocols (the way the computers talk to each other) and different physical layer components (the wires, fibers, and specialized electronic components that connect each computer to all the others). Right now, you’re probably using at least two but likely four or five different types of network to get this article off our server and onto your computing device. For example:

- Network 1 — Your laptop is connected via WiFi to a wireless access point. The radio signals that allow those two devices to communicate are one kind of physical layer component and they have a specific protocol used to transmit and receive data.

- Network 2 — That wireless access point (WAP) is connected to other networking equipment in your home or office. The WAP connects to a router (for example) via ethernet–the cables you commonly use to plug one network device into another. The router, in turn, is connected (likely via ethernet) to other devices ultimately reaching something like a cable modem (if you are at home).

- Network 3 — Your cable modem accepts data from the other devices on your network and translates that data from an ethernet signal to something suitable to be sent over the cable lines.

- Network 4+ — From there it gets a bit murky because the data you’re sending could get translated half a dozen times as it traverses multiple other networks on its way to its destination. It could get translated to fiber optics, and then to microwave, and then back to some other terrestrial copper-based network. Ultimately, your data ends up getting translated back into a high-speed ethernet variant so that it can connect to our server and request this webpage.

Then it has to do it all in reverse to get back to your computer! So you see, something as simple as reading this article requires the use of multiple different networks and types of networks to get the words (the data) from our server to your computer.

How Did Networking Start?

If you read the first article in this series, Then2Now: The History of Computing, you will learn that most computers around the world were primarily stand-alone devices until around the late 1970s. That’s when computer networks as we know them began to become mainstream. As early as the late 1950s, however, scientists and those working for the U.S. military were working on ways to allow computers to share information. The first widespread example of this was the Semi-Automatic Ground Environment (SAGE) radar system, which connected multiple military radar installations to provide a unified picture of the airspace over a wide area. This system was primarily used to detect possible air attacks, most likely from the Soviet Union. (Photo above courtesy Steve Jurvetson, flickr. If you have a minute, go read Steve’s description of how they conducted troubleshooting when something went wrong. It’s interesting reading!)

Through the 1950s and 1960s, engineers were regularly able to connect two computers together for data sharing. A well-known example of this is the SABRE system, in which two mainframe computers were linked for the purpose of sharing airline passenger reservation data.

At the same time, scientists were developing what we now call a packet-switched network, a technology that continues to form the underpinnings of today’s high-speed networks. Those diligent researchers eventually succeeded in 1969 by connecting four computers together: one each at the University of California at Los Angeles, Stanford Research Institute, UC Santa Barbara, and the University of Utah. These first four networked computers formed the basis of what came to be known as ARPANET, the Advanced Research Projects Agency NETwork. ARPANET ran at a blazing 50 Kbit/s. Compare that to a 100 Mbit/s connection, very common by today’s standards, which is about 2,000x faster.
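If you want to check that 2,000x figure yourself, the arithmetic is just one speed divided by the other. The short Python sketch below does that and, for a feel of what it means in practice, estimates how long a file would take on each link. The 5 MB "photo" is a hypothetical size chosen for illustration, and the estimate ignores protocol overhead, so treat it as a back-of-the-envelope number rather than a real-world measurement.

```python
# Link speeds quoted in the paragraph above, in bits per second.
ARPANET_BPS = 50_000        # 50 Kbit/s
MODERN_BPS = 100_000_000    # 100 Mbit/s


def speedup(new_bps: int, old_bps: int) -> float:
    """How many times faster new_bps is than old_bps."""
    return new_bps / old_bps


def transfer_seconds(size_bytes: int, bps: int) -> float:
    """Idealized transfer time: bytes -> bits, divided by link speed (no overhead)."""
    return size_bytes * 8 / bps


if __name__ == "__main__":
    print(f"Speedup: {speedup(MODERN_BPS, ARPANET_BPS):,.0f}x")  # Speedup: 2,000x
    photo = 5_000_000  # hypothetical 5 MB photo
    print(f"ARPANET: {transfer_seconds(photo, ARPANET_BPS):,.0f} s")  # ARPANET: 800 s
    print(f"Modern:  {transfer_seconds(photo, MODERN_BPS):.1f} s")    # Modern:  0.4 s
```

In other words, a file that would have tied up the original ARPANET link for over 13 minutes moves in under half a second today.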

Packet-Switched Networks

This idea of a packet-switched network allowed amazing advances in technology. To understand what a packet-switched network is, let’s compare it to a circuit-switched network and, in turn, relate both of those to physical technologies that you’ve probably used often in your life.

You can think of a circuit-switched network in the same way that you think of a telephone call. One person calls another person, they talk to each other for a while, and then they terminate that connection. Neither person can get any information from any other telephone user while the two original people are on the phone together. (Ignore 3-way calling and other more recent mass connection methods.) Computer networks used to work this way as well. One computer would be directly connected to another computer, they would exchange as much data as they needed for as long as was necessary, and then they could terminate that connection. No other systems could provide data while the original two were connected.
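To make the "line is tied up" idea concrete, here is a toy Python model of a circuit-switched exchange. It is a minimal sketch, not how any real telephone switch is built: the `Switchboard` class and the party names are hypothetical. A call reserves both parties' lines, and anyone else who tries to reach either of them gets a busy signal until someone hangs up.

```python
from typing import Dict


class Switchboard:
    """Toy circuit-switched exchange: a call ties up both lines until someone hangs up."""

    def __init__(self) -> None:
        # Each party currently on a call -> the party at the other end of the circuit.
        self.circuits: Dict[str, str] = {}

    def call(self, caller: str, callee: str) -> bool:
        """Set up a dedicated circuit; return False (a busy signal) if either line is in use."""
        if caller in self.circuits or callee in self.circuits:
            return False
        self.circuits[caller] = callee
        self.circuits[callee] = caller
        return True

    def hang_up(self, party: str) -> None:
        """Tear down the circuit, freeing both ends for new calls."""
        other = self.circuits.pop(party, None)
        if other is not None:
            self.circuits.pop(other, None)


if __name__ == "__main__":
    board = Switchboard()
    print(board.call("alice", "bob"))  # True: circuit established
    print(board.call("carol", "bob"))  # False: Bob's line is busy
    board.hang_up("alice")
    print(board.call("carol", "bob"))  # True: the line is free again
```

Notice that Carol can't get anything to Bob, not even a short message, while the Alice-Bob circuit exists. That exclusivity is exactly what packet switching removes.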

Compare that to a packet-switched network, which is much more similar to the postal mail system. Any person can write a letter to any other person anywhere in the world. The first person writes their letter, puts their letter into an envelope, applies the necessary addresses and postage, and places that letter into the mail system via a mailbox or post office. The mail carrier and postal system figure out where the letter comes from and where it’s going, and get the letter to the destination as efficiently as possible. At the same time that you’re sending letters, you can be receiving them, not only from one person but from dozens or hundreds of people at the same time. The people to whom you are writing letters can also send and receive letters to many other people at will without regard to the letters they are receiving. No one is limited to sending a letter to only one other person at a time. Every letter gets to its destination correctly because each letter has the recipient’s address included. This way, the postal workers and sorting machines know exactly where a letter is supposed to go and to whom it should be delivered. Now imagine that you and anyone with whom you can communicate can read and write and send and receive 1,000 pieces of mail per second!
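Here is the postal analogy turned into a small Python sketch. It is a simplified illustration under stated assumptions, not a real protocol: the `Packet` fields, the 8-character packet size, and the function names are all hypothetical. Each message is cut into small "envelopes" that carry a sender address, a destination address, and a sequence number. The toy postal system shuffles them (as if each took a different route) and sorts them by destination, and the recipient uses the sequence numbers to put each sender's message back together.

```python
import random
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Packet:
    src: str      # return address on the "envelope"
    dst: str      # destination address on the "envelope"
    seq: int      # position of this piece within the original message
    payload: str  # the piece of the message itself


def packetize(src: str, dst: str, message: str, size: int = 8) -> List[Packet]:
    """Cut a message into small, individually addressed packets."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(src, dst, n, chunk) for n, chunk in enumerate(chunks)]


def deliver(packets: List[Packet]) -> Dict[str, List[Packet]]:
    """Toy postal system: packets arrive in any order and are sorted by destination."""
    in_transit = packets[:]
    random.shuffle(in_transit)  # stand-in for packets taking different routes
    mailboxes: Dict[str, List[Packet]] = {}
    for p in in_transit:
        mailboxes.setdefault(p.dst, []).append(p)
    return mailboxes


def reassemble(packets: List[Packet], src: str) -> str:
    """Rebuild one sender's message by putting its packets back in sequence order."""
    mine = sorted((p for p in packets if p.src == src), key=lambda p: p.seq)
    return "".join(p.payload for p in mine)


if __name__ == "__main__":
    # Bob receives from two people while also sending; nobody waits for a free line.
    traffic = (packetize("alice", "bob", "Hello Bob, this is Alice!")
               + packetize("carol", "bob", "Bob, Carol here.")
               + packetize("bob", "alice", "Hi Alice!"))
    boxes = deliver(traffic)
    print(reassemble(boxes["bob"], "alice"))  # Hello Bob, this is Alice!
    print(reassemble(boxes["bob"], "carol"))  # Bob, Carol here.
    print(reassemble(boxes["alice"], "bob"))  # Hi Alice!
```

Because every packet carries its own addresses, the network never has to reserve a line for anyone, which is why many conversations can share the same wires at once.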

Using this analogy, you can start to see why packet-switched networks are so strongly preferred over circuit-switched networks.

(Cute little cartoon envelope over there courtesy of kongvector.)

ARPANET to Internet

This era spans from about the 1970s through the mid-to-late 1980s and, boy howdy, were there a lot of technologies tried and abandoned! Included in those technologies were things like token ring networks, where computers were connected by one long cable that ran in a literal circle. The computers took turns putting data onto their little network circle by passing around a virtual token. Only the computer with the token could place data on the network, and when it was done it passed the token to the next computer in the ring. This is reminiscent of what we used to do in kindergarten, where you and all your classmates would sit in a circle, the teacher would give one student a pillow (or a marker or whatever little trinket the teacher had handy), and only the person with the pillow (trinket) was allowed to talk. It got the job done, but it really wasn’t very efficient.
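The pillow-passing rule can be simulated in a few lines of Python. This is a simplified sketch, assuming each computer may send at most one queued message per turn with the token; real token ring standards define their own timing and frame rules, and the station names and `token_ring` function here are hypothetical. The output shows why it wasn't very efficient: the token still has to visit computers with nothing to say, and a computer with two messages waits a full lap before sending its second one.

```python
from collections import deque
from typing import Dict, List


def token_ring(stations: List[str], outbox: Dict[str, List[str]], laps: int) -> List[str]:
    """Simulate token passing: only the station holding the token may transmit.

    Each turn, the holder sends at most one queued message (a simplifying
    assumption), then passes the token to its neighbor. Returns the messages
    in the order they were placed on the ring.
    """
    queues = {s: deque(outbox.get(s, [])) for s in stations}
    wire: List[str] = []
    holder = 0  # index of the station currently holding the token
    for _ in range(laps * len(stations)):
        station = stations[holder]
        if queues[station]:
            wire.append(f"{station}: {queues[station].popleft()}")
        holder = (holder + 1) % len(stations)  # pass the token around the circle
    return wire


if __name__ == "__main__":
    order = token_ring(["A", "B", "C", "D"], {"A": ["a1", "a2"], "C": ["c1"]}, laps=2)
    print(order)  # ['A: a1', 'C: c1', 'A: a2']
```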

We also experimented with bus-topology (or daisy-chain) networks. In that configuration, all computers were connected in a long line along a single shared cable. Data placed on the cable traveled along it past every computer on the line. As you can imagine, this wasn’t the most efficient way to pass data among several computers, and in the event there was a problem in the network cable, all communication stopped until it was repaired.

Finally, we landed on what’s known as a star-topology network. In this style, each computer is connected directly to a central computer or server. The server is responsible for storing data, passing data to each end-user computer, and, in the event one end-user needs to send data to another end-user, facilitating communication between these systems. Often the server would also be connected to a printer so all the end-users could share the one printer as well. Originally, engineers placed an actual computer server in the middle of the star. In modern star networks, end-user computers are generally connected to a network switch whose function is only to pass data, not to store it.
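How does a switch "only pass data" without a server deciding where it goes? A common approach is for the switch to learn which port each computer sits on by watching the sender address of every frame. The Python sketch below models that idea. It is a simplified illustration: real switches use hardware (MAC) addresses and age out old table entries, while here the addresses are hypothetical names like "laptop" and there is no aging. Unknown destinations get sent out every other port (flooding); once the switch has seen a computer talk, it sends frames for that computer out one port only.

```python
from typing import Dict, List


class LearningSwitch:
    """Toy switch at the center of a star: forwards frames, never stores them."""

    def __init__(self, num_ports: int) -> None:
        self.num_ports = num_ports
        self.table: Dict[str, int] = {}  # address -> port it was last seen on

    def receive(self, in_port: int, src: str, dst: str) -> List[int]:
        """Return the list of ports a frame from src to dst is sent out of."""
        self.table[src] = in_port  # learn where the sender is plugged in
        if dst in self.table:
            out = self.table[dst]
            return [] if out == in_port else [out]
        # Unknown destination: flood to every port except the one it came in on.
        return [p for p in range(self.num_ports) if p != in_port]


if __name__ == "__main__":
    sw = LearningSwitch(num_ports=4)
    print(sw.receive(0, "laptop", "printer"))  # [1, 2, 3]: printer not learned yet, flood
    print(sw.receive(2, "printer", "laptop"))  # [0]: laptop was learned on port 0
    print(sw.receive(0, "laptop", "printer"))  # [2]: printer now known on port 2
```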

Star-topology networks have proven to be the backbone of modern network layouts. All systems are connected to a central node, that central node is connected to other central nodes, and data can be efficiently sent from one system to any other. If the wire or connection to any single computer is broken, the rest of the network still functions normally, just without the system on that one wire.

We have also developed other network topologies such as tree topology and mesh topology. Generally speaking each configuration discussed here has its pros and cons and network engineers will choose a combination of the best technologies to fit any given situation. Often, networks are a hybrid of many of the configurations discussed.

So What’s the Internet, Then?

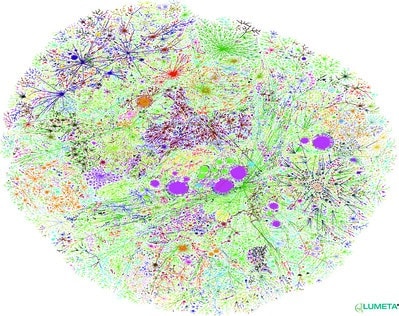

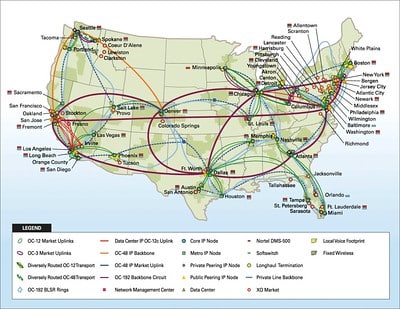

Imagine that 10,000 companies all set up their own networks in the way they think is optimal. Then another 10,000 companies did the same and also randomly connected some of their systems to the first set of companies. Then another 10,000 companies did the same thing and randomly connected their systems to the first two groups of 10,000. …and this happened over and over and over again. Add on top of this a handful of companies who have made it their business to strategically build ultra-high-speed, ultra-high-capacity connections between a relatively small number of the meshed networks we’ve described. That’s basically the internet–a redundantly interconnected group of independent networks. In this configuration there is one optimal way to get data from one point to another…but there are almost limitless possible ways if the optimal way is unavailable.
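The "one optimal way, but many backups" idea can be illustrated with a classic shortest-path search. The Python sketch below runs Dijkstra's algorithm over a handful of made-up networks, then cuts one link (think of a severed fiber) and searches again. Everything in it is hypothetical: the network names and link "costs" are invented, and routing between real networks on the internet uses BGP, which picks paths largely by policy rather than a simple shortest-path calculation. Treat this as a sketch of the concept, not of how the internet actually routes.

```python
import heapq
from typing import Dict, List, Optional

Graph = Dict[str, Dict[str, int]]  # network -> {neighboring network: link cost}


def shortest_path(links: Graph, start: str, goal: str) -> Optional[List[str]]:
    """Dijkstra's algorithm: return the cheapest list of networks from start to goal, or None."""
    queue = [(0, start, [start])]  # (total cost so far, current network, path taken)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in links.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return None  # no route exists at all


def without_link(links: Graph, a: str, b: str) -> Graph:
    """Return a copy of the graph with the a<->b link cut."""
    return {
        node: {nbr: w for nbr, w in nbrs.items() if {node, nbr} != {a, b}}
        for node, nbrs in links.items()
    }


# Hypothetical networks and costs, invented for illustration only.
LINKS: Graph = {
    "home_isp":   {"backbone_a": 1, "regional": 4},
    "backbone_a": {"home_isp": 1, "backbone_b": 1},
    "backbone_b": {"backbone_a": 1, "host_isp": 1, "regional": 3},
    "regional":   {"home_isp": 4, "backbone_b": 3, "host_isp": 5},
    "host_isp":   {"backbone_b": 1, "regional": 5},
}

if __name__ == "__main__":
    print(shortest_path(LINKS, "home_isp", "host_isp"))
    # ['home_isp', 'backbone_a', 'backbone_b', 'host_isp']
    cut = without_link(LINKS, "backbone_a", "backbone_b")
    print(shortest_path(cut, "home_isp", "host_isp"))
    # ['home_isp', 'regional', 'backbone_b', 'host_isp']
```

The first search takes the fast backbone route; once that link is cut, the data simply finds another way through the regional network.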

On top of that, you have many different protocols (think languages) used on the different networks. Each different protocol is optimized for the distance and speed it’s traveling. It’s also optimized for the transmission medium be it copper wire, fiber optic, wireless, or something completely different.

So What’s Next?

Scientists, engineers, and data nerds of all flavors and from all over the world are working tirelessly to figure out the answer to that question. In the short term I predict incremental changes along the lines of what we’ve seen in the past. We have kind of hit a cap on the speeds that our current copper wires and protocols can deliver. There may be a new local protocol introduced which allows us to increase those transmission rates but I have my doubts as to whether it will require a new type of wire–there’s simply too much ethernet cabling installed across the country for that.

Likewise, scientists will figure out how to squeeze more and more wavelengths of light into optical fiber thereby increasing the capacity of networks already in place. An alternative there is to create new data transmission techniques using new fibers. In fact, in 2021 researchers in Japan did exactly that and smashed 319 terabits per second through a four-core optical fiber. That’s like downloading 10,000 movies in one second. (The linked article says 80,000 but they are obviously bad at math and technology.)
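How many movies per second a 319 Tbit/s link can move depends entirely on how big you assume a movie file is. The small Python sketch below does the conversion. The file sizes in it are assumptions, not figures from the research: at roughly 4 GB per movie you get about 10,000 per second, while at roughly 0.5 GB per movie you get about 80,000. It uses decimal units (1 Tbit = 10^12 bits, 1 GB = 10^9 bytes) and ignores any protocol overhead.

```python
def movies_per_second(link_tbps: float, movie_gb: float) -> float:
    """How many movie-sized files fit through a link in one second.

    link_tbps: link speed in terabits per second (1 Tbit = 10**12 bits)
    movie_gb:  assumed movie size in gigabytes (1 GB = 10**9 bytes, 8 bits each)
    """
    bits_per_second = link_tbps * 1e12
    bits_per_movie = movie_gb * 1e9 * 8
    return bits_per_second / bits_per_movie


if __name__ == "__main__":
    for size_gb in (4, 0.5):  # assumed file sizes, not from the source
        print(f"{size_gb} GB movies: {movies_per_second(319, size_gb):,.0f} per second")
    # 4 GB movies: 9,969 per second
    # 0.5 GB movies: 79,750 per second
```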

Long term, I expect quantum networks to augment our existing data transmission needs. This technology could allow data rates that are orders of magnitude above what we can achieve today. After all, who wouldn’t want to download one million 4K full-length movies per second?

Then2Now Series:

- Then2Now: The History of Computing

- Then2Now: An Obnoxiously Brief History of Networking and The Internet (current article)
